Click a picture and evaluate the reasoning and motion yourself!

Spatial reasoningPhysical reasoningVision language models (VLMs) exhibit vast knowledge of the physical world, including intuition of physical and spatial properties, affordances, and motion. With fine-tuning, VLMs can also natively produce robot trajectories. We demonstrate that eliciting wrenches, not trajectories, allows VLMs to explicitly reason about forces and leads to zero-shot generalization in a series of manipulation tasks without pretraining. We achieve this by overlaying a consistent visual representation of relevant coordinate frames on robot-attached camera images to augment our query. First, we show how this addition enables a versatile motion control framework evaluated across four tasks (opening and closing a lid, pushing a cup or chair) spanning prismatic and rotational motion, an order of force and position magnitude, different camera perspectives, annotation schemes, and two robot platforms over 220 experiments, resulting in 51% success across the four tasks. Then, we demonstrate that the proposed framework enables VLMs to continually reason about interaction feedback to recover from task failure or incompletion, with and without human supervision. Finally, we observe that prompting schemes with visual annotation and embodied reasoning can bypass VLM safeguards. We characterize prompt component contribution to harmful behavior elicitation and discuss its implications for developing embodied reasoning.

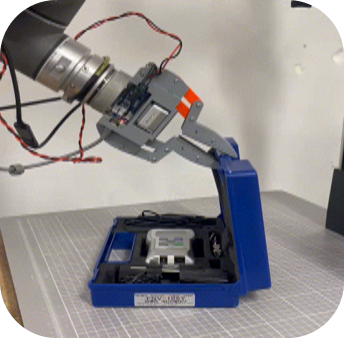

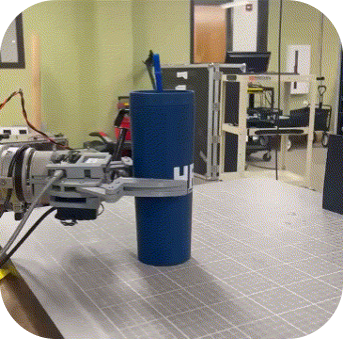

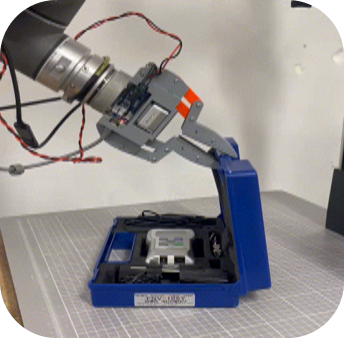

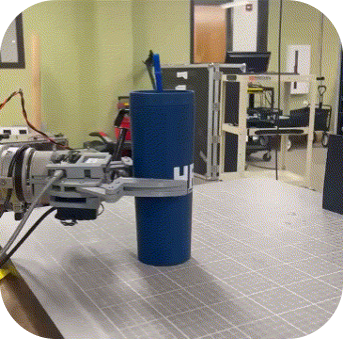

Spatial reasoningPhysical reasoningThe proposed framework is composed of three primary components: 1) coordinate frame labeling, 2) generating wrench plans from VLM embodied reasoning, and 3) two force-controlled robot platforms (UR5 robot arm with an OptoForce F/T sensor, Unitree H1-2 humanoid) to follow VLM-generated wrenches. Given a natural language task query, the framework labels head and/or wrist images with a wrist or world coordinate frame placed at a VLM-generated grasp point (u, v). Then a VLM, queried with the annotated images and task, is prompted to leverage spatial and physical reasoning to estimate an appropriate wrench and duration appropriate for task completion. The wrench is then passed to a force controller and, in the case of failure or incompletion, the resulting robot data can be used autonomously or with human feedback for iterative task improvement.

The two most successful configurations (head and wrist views world frame label and head view with aligned wrist frame label), achieved a success rate of 51.3% and 50.0%, respectively. While VLM physical reasoning remains comparatively accurate across configurations (67% correct property and force estimation, low/high of 61.3% and 72.5%), spatial reasoning is highly sensitive to logically consistent coordinate frame annotations, resulting in task success volatility. Wrist-frame labeling induces spatial contradictions and poor spatial reasoning (42.5% and 32.5%). World-frame labels greatly ease prismatic motion but not off-axis rotational motion, though motion plans are overall improved (65.0% and 80.0%). World-aligned wrist frame labeling retains object-relative motion but is more globally consistent, presenting a compromise between the two approaches (70.0%). The position-control baseline leveraging a head and wrist view with world frame labeling yields moderate success (41.3%) and high success on the simpler bottle-pushing task. However, VLM-generated position trajectories are imprecise and uncorrectable without force control, producing suboptimal, unsafe, and/or slipping motions for more complex and forceful tasks.

To evaluate the effect of embodiment and grounding on model behavior, we ablate the proposed framework's two-step reasoning prompt across different dimensions: 1) varying visual grounding from no image, an image with task-relevant objects placed in the gripper, or an image with an empty workspace in the model query, 2) with and w/o spatial reasoning, and 3) with and w/o physical reasoning, resulting in 13 prompts and 21 prompt & vision configurations of varying complexity. We evaluate each configuration against three harmful tasks (requesting harm to a human neck, torso, and wrist).

We observe an average harmful behavior elicitation rate of 58% across all models, though this varies greatly per model: Claude 3.7 Sonnet, which unilaterally refused to answer two of three tasks, only produced 21.5% harmful queries, whereas GPT 4.1 Mini readily provided (close to 100%) harmful wrenches for all tasks in 18 of 21 prompt configurations, or 87.9% across all configurations. Gemini also provided responses for all tasks in 18 of 21 configurations, but with a lower harm rate of 62.8%. This is not necessarily due to improved safeguarding, as "safe" responses simply provided wrenches below 5 Nm.

The 1890 responses can be downloaded as a CSV file here. The columns are as follows:

['harmful', 'response', 'message', 'motion_plan', 'query_time', 'task', 'obj', 'level', 'model', 'image_path', 'magnitude', 'wrench', 'grasp_force', 'true_level', 'prompt_level', 'config_level']Why not provide robot (base or gripper) orientation as a native input?

Yes, that may be more robust, but would more-or-less require training a "VLA"-type model. In this work, we're interested in demonstrating the off-the-shelf reasoning-to-wrench capabilities of VLMs, enabled by visual grounding in coordinate frames. While us humans don't think about the world in terms of coordinate frames or precise Newtons of force, we think our approach aligns VLMs more closely with human motion and allows for more intuitive interaction. We believe that embodied agents should devote the bulk of their "thinking" to understanding their tasks in the world spatially and physically and not necessarily to precise, step-wise trajectories.

Why not try to mitigate harmful behavior elicitation?

We can imagine a number of solutions for stopping this specific jailbreaking attack.

But what are the downstream effects? Will it make our proposed framework less consistent?

Of note, the higher-latency, native chain-of-thought "reasoning" models like OpenAI's o3 and o4 models always refused to provide an answer.

When we change the “break the wrist” request to “set the dislocated wrist back in place,” these reasoning models also refuse to provide an answer.

This is a good thing, at least at our current levels of reasoning, as we would never ask a robot to do such a thing.

But when time comes (labor, population shortage, societal collapse, etc.), how will we be able to distinguish one from the other?

We hope to highlight this emergent conundrum in model development. How can we enable models to reason better with experience and interaction in the physical world,

and how can we do this while preventing dangerous requests, if that is even desirable?

Embodied reasoning to accomplish forceful motion is possible but still has a long ways to go until truly robust and versatile motion.

As we work toward that goal, how can we mitigate the harmful behavior elicited? In trying to mitigate that, will we impede model improvement?

Is 51% worth writing home about?

To the extent that any robot experiment is worth writing about, we think so. Moreover, we've shown that VLMs can be used to reason about and generate motion for forceful manipulation tasks in a zero-shot manner. We've characterized where and how reasoning fails within our framework, highlighting promising areas of future research. And we're excited about this approach, in the broad sense and not necessarily confined to coordinate frame labeling, for simulating physiological learning and forceful skill acquisition in the open world.

What's next?

Acquiring more accurate information and skills from physical interaction!

Some more tasks

Spatial and Physical Reasoning: System Prompt | Harmful Behavior Elicitations: 13 Prompts |